Fast Visual Motion Control

A key capability for the next wave of robotic systems is the ability to move dynamically and naturally in a complex environment. Robotic vision is core to all activities in this project, and we consider novel vision sensors such as event cameras and plenoptic cameras.

Team Members

Nick Barnes

Australian National University (ANU), Australia

Nick Barnes is an Associate Investigator with our Centre. He received a PhD in computer vision for robot navigation in 1999 from the University of Melbourne. He was a visiting research fellow at the LIRA-Lab at the University of Genoa, Italy, supported by an Achiever Award from the Queen’s Trust for Young Australians. He then returned to Australia to lecture at the University of Melbourne before joining NICTA’s Canberra Research Laboratory, now known as Data61@CSIRO.

He is currently a senior principal researcher and research group leader in computer vision for Data61 and an Adjunct Associate Professor with the Australian National University. His research interests include visual dynamic scene analysis, wearable sensing, vision for low vision assistance, computational models of biological vision, feature detection, vision for vehicle guidance and medical image analysis.

Jochen Trumpf

Australian National University (ANU), Australia

Jochen Trumpf received the Dipl.-Math. and Dr. rer. nat. degrees in mathematics from the University of Wuerzburg, Germany, in 1997 and 2003, respectively. He is currently the Director of the Software Innovation Institute at the Australian National University.

His research interests include observer theory and design, linear systems theory and optimisation on manifolds with applications in robotics, computer vision and wireless communication.

Tom Drummond

Monash University, Australia

Professor Drummond is a Chief Investigator based at Monash. He studied mathematics (BA) at the University of Cambridge. In 1989 he emigrated to Australia and worked for CSIRO in Melbourne for four years before moving to Perth for his PhD in Computer Science at Curtin University. In 1998 he returned to Cambridge as a post-doctoral Research Associate and in 2002 was appointed as a University Lecturer. In 2010 he returned to Melbourne and took up a Professorship at Monash University. His research is principally in the field of real-time computer vision (i.e. processing information from a video camera in a computer in real time, typically at frame rate), machine learning and robust methods. These have applications in augmented reality, robotics, assistive technologies for visually impaired users and medical imaging.

Yonhon Ng

Australian National University (ANU), Australia

Yonhon Ng joined the Centre as a research fellow at the Australian National University (ANU) in January 2019. He is working on the Fast Visual Motion Control project under the supervision of Prof. Robert Mahony. He completed his PhD at the ANU under the supervision of Dr. Jonghyuk Kim, Prof. Brad Yu and Assoc. Prof. Hongdong Li. He also obtained his Bachelor of Engineering (R&D) at the ANU and was awarded a University Medal.

Yonhon’s research interests include state estimation and control, 3D computer vision and robotics. He enjoys playing badminton, fishing and cooking during the weekend.

Alex Martin

Australian National University (ANU), Australia

In 2008 I started with the ANU in the Computer Vision and Robotics group led by Prof Rob Mahony, Jonghyuk Kim, Hongdong Li and Jochen Trumpf. Since I started at the ANU, the Centre of Excellence for Computer Vision has been established and I have been involved in many projects within the Centre, including stereo vision water detection, multi-camera array sensors, high-speed optical flow hardware on quadrotors and work with light-field cameras. Our lab has a 4WD vehicle that is used for acquiring various data sets, numerous quadrotor aerial vehicles and a flight environment with VICON. We also have a UR5 robotic arm and work cell used for computer vision and human/robot interaction. I am helping to develop an environment to test high-speed, optical-flow-based machine control.

Timo Stoffregen

Monash University, Australia

Timo started his PhD at Monash University in 2017, exploring new methods and algorithms that make use of the highly temporally resolved data produced by neuromorphic camera sensors, with the aim of hardware-accelerating these for use in real-time applications. His work aims to improve on the RV2 Centre research project by exploring the use of novel visual sensors. Prior to his work at Monash, he completed his undergraduate studies at the University of Bremen, Germany, and worked at the German Research Centre for Artificial Intelligence (DFKI). He also took a two-year break to travel through South America and Europe. In his spare time, Timo is a keen hiker who likes to spend as much time as he can outdoors, and he volunteers with Bush Search and Rescue.

Pieter van Goor

Australian National University (ANU), Australia

Pieter completed his Bachelor of Engineering (Research & Development) (Honours) and Bachelor of Science at ANU in 2018, majoring in Mechatronics and Mathematics respectively. In 2017 he worked as a student intern at Data61 at CSIRO in Brisbane. His engineering honours thesis, which he completed in 2017, looked at non-linear multi-agent system control theory using unit quaternions.

In October 2018 Pieter commenced his PhD in non-linear observer theory under the supervision of Rob Mahony at the ANU. He is researching fast geometric observers for non-linear control problems, such as SLAM, with a focus on fast and computationally inexpensive implementations on mobile robots. In this research, he is also looking at novel hardware options for implementing these systems.

Sean O’Brien

Australian National University (ANU), Australia

Sean joined the Centre in 2016 under the supervision of Jochen Trumpf, Rob Mahony, and Viorela Ila. He received his Bachelor of Engineering (Honours) in mechatronics and Bachelor of Science in mathematics at ANU in 2015. Presently, Sean is looking at applying the theory of infinite-dimensional observers to various dense sensing modalities, including light-field cameras. He hopes that new, efficient algorithms will emerge from his research to assist in robotic vision applications such as real-time depth mapping and odometry estimation.

Cedric Scheerlinck

Australian National University (ANU), Australia

Cedric completed his Master of Engineering (Mechanical) at the University of Melbourne in 2016. In 2015 he worked as a research assistant in the Fluid Dynamics lab at Melbourne before completing an exchange semester at ETH Zurich. His final year Masters thesis was a combined project with the University of Melbourne and The Northern Hospital, performing computational fluid dynamics studies on patient-specific coronary arteries.

In 2017 Cedric commenced his PhD in robotic vision under the supervision of Chief Investigator Rob Mahony at ANU. His PhD topic is high speed image reconstruction and deep learning with event cameras.

Key Results

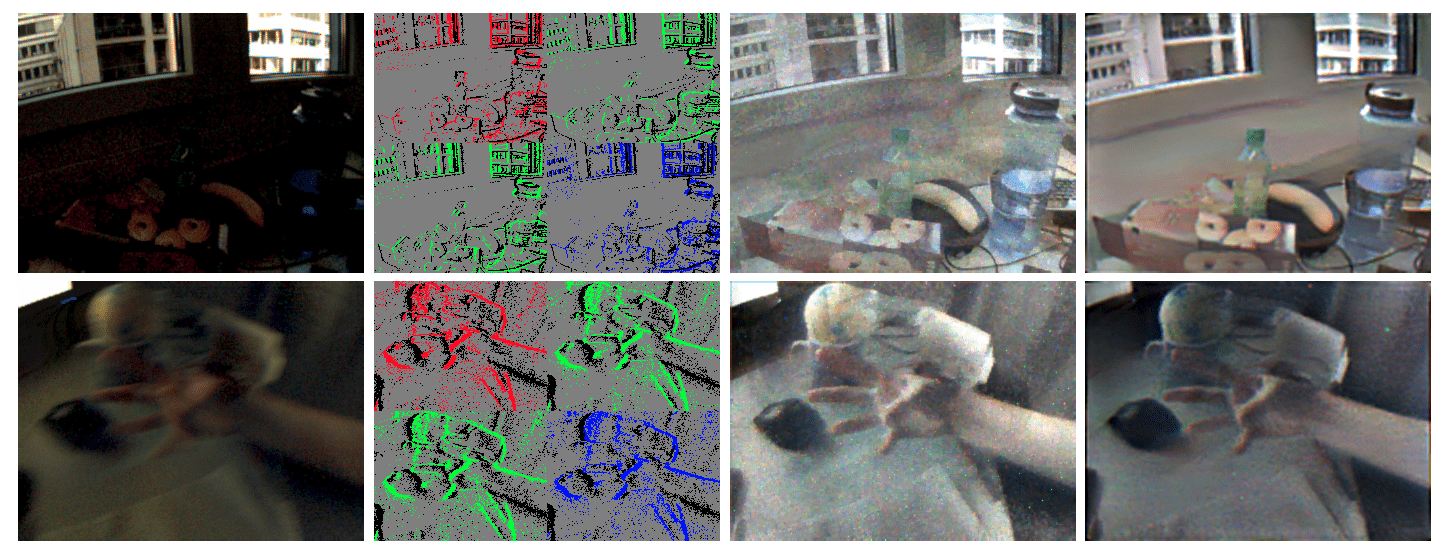

Novel Hardware: Event cameras are bio-inspired vision sensors that report the time and position of pixels that change, rather than a regular sequence of images like a conventional camera. They have high dynamic range, do not experience motion blur, and have latency in the order of microseconds. These properties make them ideal for robotic vision applications. However, the output is sparse and asynchronous, which requires novel processing paradigms conceptually different from those for conventional cameras.
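
To make the sparse, asynchronous output concrete, the following is a minimal Python sketch, not the project's implementation. It assumes each event is a tuple of timestamp, pixel position and polarity (a common convention, but the `Event` layout here is hypothetical), and it accumulates events with a simple per-pixel leaky integrator. The function name and the `contrast` and `decay_rate` parameters are illustrative assumptions, and events are assumed to arrive in time order.

```python
import math
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    """One event: a brightness change at a single pixel (hypothetical layout)."""
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 for a brightness increase, -1 for a decrease


def integrate_events(events: Iterable[Event], width: int, height: int,
                     contrast: float = 0.1,
                     decay_rate: float = 0.0) -> List[List[float]]:
    """Accumulate time-ordered events into a per-pixel brightness-change image.

    Only the pixel named by each event is touched, so the cost scales with
    the number of events rather than with the image size. `contrast` and
    `decay_rate` are illustrative tuning parameters, not sensor constants.
    Each pixel's value is current as of that pixel's own most recent event.
    """
    image = [[0.0] * width for _ in range(height)]
    last_update = [[0.0] * width for _ in range(height)]
    for ev in events:
        # Decay the stored value for the time elapsed since this pixel was
        # last updated (harmless on the first event, when the value is zero).
        elapsed = ev.t - last_update[ev.y][ev.x]
        image[ev.y][ev.x] *= math.exp(-decay_rate * elapsed)
        # Each event contributes one contrast step in the direction of its polarity.
        image[ev.y][ev.x] += contrast * ev.polarity
        last_update[ev.y][ev.x] = ev.t
    return image


# Example: two positive events at pixel (x=1, y=0), one negative at (x=0, y=1).
events = [Event(0.00, 1, 0, +1), Event(0.01, 1, 0, +1), Event(0.02, 0, 1, -1)]
image = integrate_events(events, width=2, height=2)
```

There is no frame loop in this sketch: pixels that see no change generate no work, which is the main conceptual difference from processing a conventional image sequence.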

PhD researchers Cedric Scheerlinck (ANU) and Timo Stoffregen (Monash), along with CIs Mahony (ANU) and Drummond (Monash), have developed architectures and training paradigms to build state-of-the-art CNNs for image reconstruction and optic flow computation from event camera data streams. These algorithms demonstrate good-quality reconstruction in situations where existing algorithms fail, specifically those with very different time scales (bursting a balloon) or those imaging an almost static scene (with very few events). The project has also applied both filter-based reconstruction methods and CNN methods to colour event cameras.

Research Fellow Yonhon Ng (ANU) and honours students Ziwei Wang and Takuma Adams (ANU) are continuing the development of event filters for image reconstruction and estimation of optical flow (or normal flow) from event cameras. This will be of particular interest when the event stream is processed to remove the effect of camera rotation, so that the remaining optical flow is associated with motion in the scene. A final research direction is the development of algorithms to track image features directly from event data.
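
The flow induced by camera rotation depends only on the angular velocity and the pixel position, not on scene depth, which is why it can be predicted and removed. The sketch below illustrates that idea at the level of flow vectors; it is not the project's event-level processing. It assumes a calibrated pinhole camera with normalised image coordinates, an angular velocity expressed in the camera frame (for example from a gyroscope), and the standard rotational flow equations. Sign conventions vary between texts, and the function names are hypothetical.

```python
from typing import Tuple


def rotational_flow(x: float, y: float,
                    omega: Tuple[float, float, float]) -> Tuple[float, float]:
    """Optical flow at normalised image point (x, y) due to camera rotation only.

    omega is the camera's angular velocity in rad/s, expressed in the camera
    frame with the z axis along the optical axis. The result is independent
    of scene depth.
    """
    wx, wy, wz = omega
    u = x * y * wx - (1.0 + x * x) * wy + y * wz
    v = (1.0 + y * y) * wx - x * y * wy - x * wz
    return u, v


def derotate_flow(flow: Tuple[float, float], x: float, y: float,
                  omega: Tuple[float, float, float]) -> Tuple[float, float]:
    """Subtract the predicted rotational flow from a measured flow vector."""
    u_rot, v_rot = rotational_flow(x, y, omega)
    return flow[0] - u_rot, flow[1] - v_rot


# Example: remove the effect of a slow yaw rotation from one measured flow vector.
residual = derotate_flow((0.12, -0.03), x=0.2, y=-0.1, omega=(0.0, 0.05, 0.0))
```

Under these assumptions, the residual flow contains only the components caused by camera translation and by objects moving in the scene.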

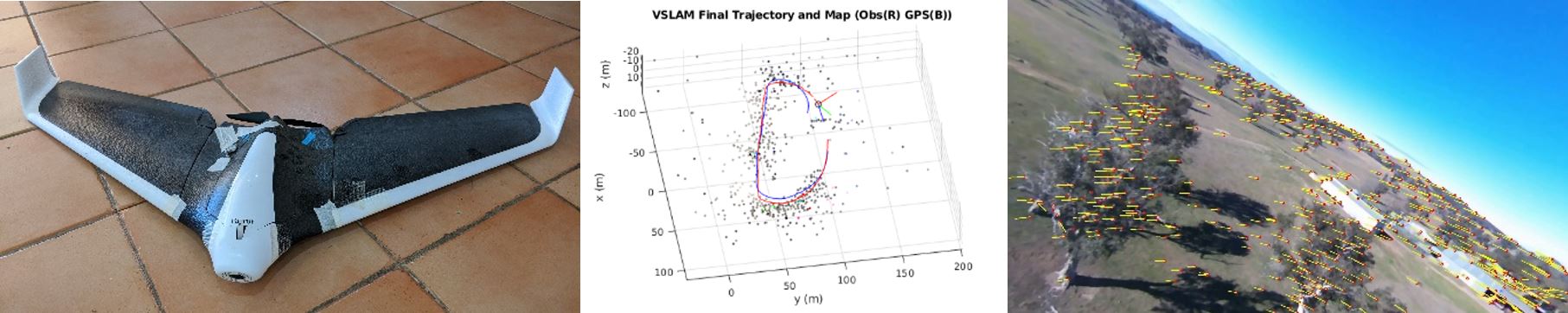

Visual Odometry: PhD researcher Pieter van Goor (ANU) and research fellow Yonhon Ng (ANU) along with CI Mahony (ANU) have developed a novel theoretical framework for Visual Odometry (VO) and Simultaneous Localisation And Mapping (SLAM). The approach exploits new global symmetries discovered for vision-based sensors as well as recent advances in equivariant observer design. We have derived visual odometry algorithms using classical constructive nonlinear design methods (demonstrated at RoboVis 2019) and applied this to data from an aerial robotic vehicle.

The team has contributed to the geometry of second-order equivariant observers, which opens the door to Visual Inertial Odometry (VIO) problems, where information from an Inertial Measurement Unit (IMU) is available.

Activity Plan for 2020

- Develop a suite of metrics and problems to demonstrate the robustness of real-world operation of geometric SLAM algorithms.

- Develop a suite of algorithms for VO/VIO/SLAM based on these geometries. Distribute open-source code and demonstrate this code on aerial vehicles integrated with the ArduPilot software community.

- Further develop the filter solutions for event/frame cameras. Develop open-source code for high-quality image reconstruction, optic flow reconstruction, and robust feature tracking algorithms.