Fast Visual Motion Control

A key capability for the next wave of robotic systems is the ability to move dynamically and naturally in a complex environment guided by information from cameras.

Team Members

Nick Barnes

Australian National University

Nick Barnes is an Associate Investigator in the Centre. He received a PhD in computer vision for robot navigation in 1999 from the University of Melbourne. He was a visiting research fellow at the LIRA-Lab at the University of Genoa, Italy, supported by an Achiever Award from the Queen’s Trust for Young Australians, before returning to Australia to lecture at the University of Melbourne. He then joined NICTA’s Canberra Research Laboratory, now known as Data61@CSIRO. He became a senior principal researcher and led the Computer Vision Research Group from 2006 to 2016. Over this period, it grew to be a national research group with more than 25 research staff. In 2016 he moved to ANU part-time, then full-time in 2019.

He is currently an Associate Professor in the Research School of Electrical, Energy and Materials Engineering at ANU. His research interests include 3D vision, visual saliency, probabilistic weakly-supervised approaches to dense prediction, and vision processing for prosthetic vision.

Jochen Trumpf

Australian National University

Jochen Trumpf received the Dipl.-Math. and Dr. rer. nat. degrees in mathematics from the University of Wuerzburg, Germany, in 1997 and 2003, respectively. He is currently the Director of the Software Innovation Institute at ANU.

His research interests include observer theory and design, linear systems theory and optimisation on manifolds with applications in robotics, computer vision and wireless communication.

Tom Drummond

Monash University

Tom Drummond is a Professor and Head of the Department of Electrical and Computer Systems Engineering at Monash University. He is also the Monash Node Leader and sits on the Executive Committee of the ARC Centre of Excellence for Robotic Vision. He has been awarded the Koenderink Prize and the IEEE International Symposium on Mixed and Augmented Reality (ISMAR) 10-year impact award.

He studied for a BA in mathematics at the University of Cambridge. In 1989 he emigrated to Australia and worked for CSIRO in Melbourne for four years before moving to Perth for his PhD in Computer Science at Curtin University. In 1998 he returned to the University of Cambridge as a Postdoctoral Research Associate and in 1991 was appointed to the position of Lecturer. In 2010 he returned to Melbourne and took up a Professorship at Monash University. His research is principally in the field of real-time computer vision (i.e. processing of information from a video camera in a computer in real time, typically at frame rate), machine learning and robust methods. These have applications in augmented reality, robotics, assistive technologies for visually impaired users, and medical imaging. During his time at both the University of Cambridge and Monash University he has been awarded research and industry grants in excess of AUD $30M.

Yonhon Ng

Australian National University

Yonhon joined the Centre as a Research Fellow at the ANU in January 2019. He works on the Fast Visual Motion Control project under the supervision of Professor Robert Mahony. He completed his PhD degree at ANU under the supervision of Associate Professor Jonghyuk Kim, Professor Brad Yu and Professor Hongdong Li. He also obtained his Bachelor of Engineering (R&D) at ANU and was awarded a University Medal.

Yonhon’s research interests include equivariant observers, 3D computer vision and robotics. He enjoys playing badminton, fishing and cooking on weekends.

Alex Martin

Australian National University

Alex started with ANU in the Computer Vision and Robotics group led by Prof Rob Mahony in 2008. He has been involved with projects including machine vision and learning, multi-camera array sensors, high-speed optical flow hardware on quadrotors and human/collaborative robot interaction. The lab he works in has a 4WD vehicle that is used for acquiring various data sets, numerous quadrotor aerial vehicles and a flight environment with VICON. It also has various robotic manipulators and a work cell used for computer vision and human/robot interaction.

On a day-to-day basis Alex works on projects and manages lab administration, WHS and safe working procedures, procurement, and emergency fast-turnaround hardware repair and fabrication. He supervises students and conducts student and staff inductions into the workshop and workspace area. In his spare time he wrestles his young children, improves his photographic skills and works on various art and design projects.

Timo Stoffregen

Monash University

Timo started his PhD at Monash University in 2017, exploring new methods and algorithms that make use of the highly temporally resolved data produced by neuromorphic camera sensors, with the aim of hardware-accelerating these for use in real-time applications. His work aims to improve on the RV2 Centre research project by exploring the use of novel visual sensors. Prior to his work at Monash, he completed his undergraduate studies at the University of Bremen, Germany, and worked at the German Research Centre for Artificial Intelligence (DFKI), as well as taking a two-year break to travel through South America and Europe. In his spare time, Timo is a keen hiker who likes to spend as much time as he can outdoors, and he is a volunteer with Bush Search and Rescue.

Pieter van Goor

Australian National University

Pieter completed his Bachelor of Engineering (Research & Development) (Honours) and Bachelor of Science at ANU in 2018, majoring in Mechatronics and Mathematics, respectively. In 2017 he worked as a student intern at Data61 at CSIRO in Brisbane. His engineering honours thesis, completed in 2017, studied non-linear multi-agent system formation control using unit quaternions.

In October 2018 Pieter commenced his PhD in non-linear observer theory under the supervision of Rob Mahony at ANU. He is researching geometric observers for non-linear control problems, such as SLAM, with a focus on applying Lie group symmetries and implementation on mobile robots. In this research, he is also looking at novel hardware options for implementing these systems.

Sean O’Brien

Australian National University

Sean joined the Centre in 2016 under the supervision of Jochen Trumpf, Rob Mahony, and Viorela Ila. He received his Bachelor of Engineering (Honours) in mechatronics and Bachelor of Science in mathematics at ANU in 2015. Presently, Sean is looking at applying the theory of infinite-dimensional observers to various dense sensing modalities, including light-field cameras. He hopes that new, efficient algorithms will emerge from his research that will assist robotic vision applications such as real-time depth mapping and odometry estimation.

Cedric Scheerlinck

Australian National University

Cedric completed his Master of Engineering (Mechanical) at the University of Melbourne in 2016. In 2015 he worked as a research assistant in the Fluid Dynamics lab at Melbourne before completing an exchange semester at ETH Zurich. His final-year Master’s thesis was a combined project with the University of Melbourne and The Northern Hospital, performing computational fluid dynamics studies on patient-specific coronary arteries.

In 2017 Cedric commenced his PhD in robotic vision under the supervision of Chief Investigator Rob Mahony at ANU. His PhD topic is high-speed image reconstruction and deep learning with event cameras.

Project Aim

The aim of this project is to develop real-time (augmented) vision processing pipelines that provide robust spatial awareness of the environment surrounding a cyber-physical system, and to couple these representations into robust base-level motion control. This capability is critical for the new wave of robots and cyber-physical systems if they are to understand and move in a range of complex, cluttered and unstructured environments full of other autonomous agents, including humans and other animals.

Key Results

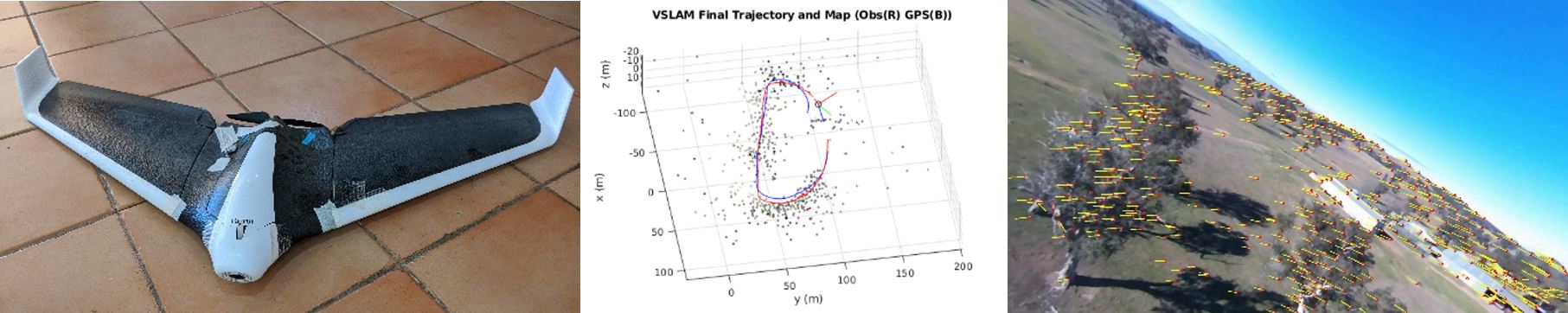

The problem of visual simultaneous localisation and mapping (VSLAM) is a foundational problem in the robotics community. It was originally solved using extended Kalman filter algorithms. However, these algorithms suffered a key limitation: the linearisation used to propagate the state covariance was only approximate, and it “baked in” errors to the estimates that could never be corrected. Modern simultaneous localisation and mapping (SLAM) addresses this by keeping all data active in a graph and solving a nonlinear least squares problem, recomputing linearisation points as the estimates improve.

The Fast Visual Motion Control project has been working on a new filter paradigm based on equivariant symmetry. The project has discovered symmetries for a range of vision-based problems including VSLAM, Visual Odometry (VO), Visual Inertial Odometry (VIO) and homography tracking. Systems with such symmetries are termed equivariant. Over the last five years, we have developed a general analysis framework for equivariant systems, culminating in a theory paper published on arXiv in 2021. This framework leads to a general filter, which we term the Equivariant Filter (EqF), based on linearisation of error dynamics defined using the symmetry properties. Crucially, the linearisation of the equivariant error dynamics is exact, that is, there is no approximation error, which removes the key limitation of the original filter-based SLAM solutions. We have applied this design approach to VIO and benchmarked it on the EuRoC VO data set. The results beat all state-of-the-art VO filters, including ROVIO and SVO-MSF. This work will appear in the 2021 International Conference on Robotics and Automation (ICRA) proceedings. The algorithm is computationally lighter than its competitors and should lead to a new generation of VIO and VSLAM algorithms.
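To give some intuition for why an error defined through a symmetry can behave better than a plain coordinate difference, the toy sketch below propagates a true planar pose and a wrong estimate with the same body-frame motion increment. It is not the EqF and does not come from the project's code. It assumes poses in SE(2) written as `(x, y, theta)` tuples, and the helper names `compose`, `inverse` and `simulate` are hypothetical. The group error `X * inverse(X_hat)` stays constant whatever the trajectory, while the coordinate difference drifts.

```python
import math

def compose(a, b):
    """Group product on SE(2): apply pose b in the frame of pose a."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)

def inverse(a):
    """Group inverse on SE(2)."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), -(-s * ax + c * ay), -at)

def simulate(true_pose, est_pose, step, n_steps):
    """Propagate truth and estimate with the same body-frame increment.

    Returns the history of the group error X * inverse(X_hat) and of the
    naive coordinate difference X - X_hat.
    """
    group_errors, coord_errors = [], []
    for _ in range(n_steps + 1):
        group_errors.append(compose(true_pose, inverse(est_pose)))
        coord_errors.append(tuple(t - e for t, e in zip(true_pose, est_pose)))
        true_pose = compose(true_pose, step)
        est_pose = compose(est_pose, step)
    return group_errors, coord_errors

# Assumed example values: the estimate starts offset and rotated by 0.3 rad.
group_errors, coord_errors = simulate(
    true_pose=(0.0, 0.0, 0.0),
    est_pose=(1.0, 0.5, 0.3),
    step=(0.1, 0.0, 0.05),  # forward motion with a slow turn
    n_steps=100,
)
print("group error, first and last:", group_errors[0], group_errors[-1])
print("coordinate error, first and last:", coord_errors[0], coord_errors[-1])
```

Because the group error does not depend on where the robot is or how it moves, a filter built on it does not have to re-linearise around a changing state estimate in this simple setting. The filters described above handle measurements, noise and richer symmetries, none of which this sketch attempts.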

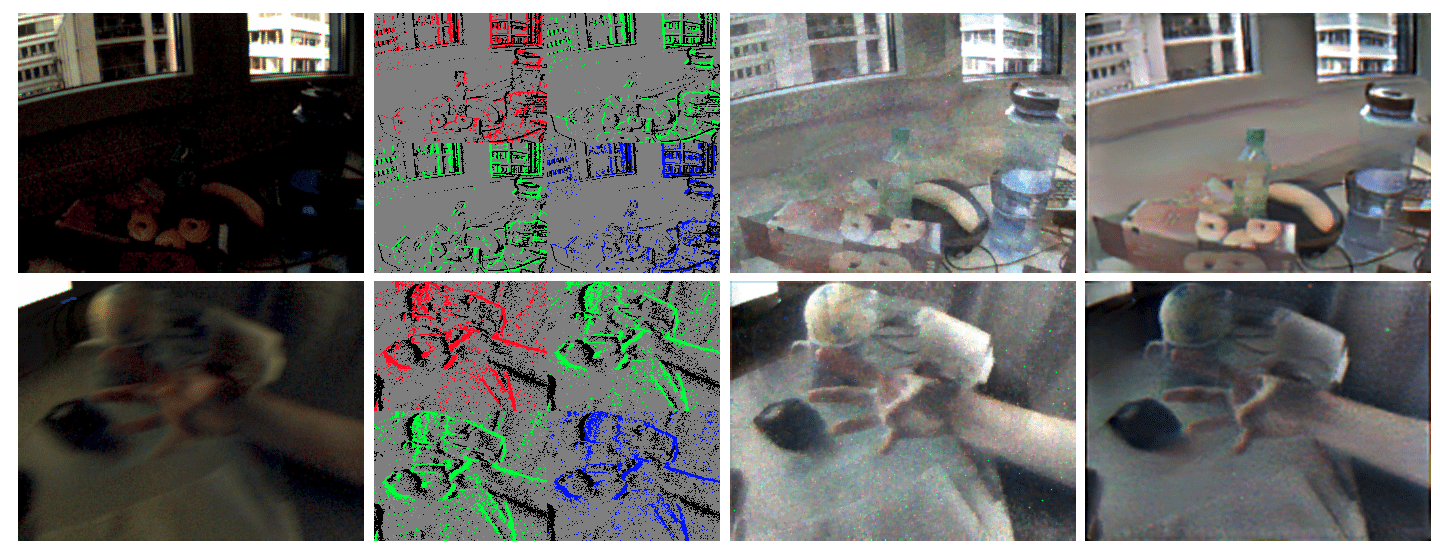

The project is also actively researching novel vision hardware, particularly event cameras. The project has developed an Asynchronous Kalman Filter (AKF) for event camera image reconstruction. This filter uses a pixel-by-pixel scalar Riccati equation to adjust the gains used to fuse frame and event data, producing clear, high-quality, high dynamic range images from a hybrid event-frame camera. In separate work we have designed algorithms for flicker removal for event cameras, based on time-delayed feedback at the pixel level that implements a linear “comb filter” to filter all harmonics of a tonic frequency. The filter is highly efficient at removing flicker from LED and fluorescent lights in event camera images. Continuing work on machine learning for event cameras has led to a deep understanding of simulated data sets for event cameras. Image reconstruction networks based on these new data sets show significant improvement in difficult reconstruction scenarios, such as when there is little or no movement in the scene.
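The comb filter idea can be sketched in a few lines. The example below is a simplified discrete-time stand-in, not the project's algorithm: it assumes a uniformly sampled intensity signal for a single pixel rather than an asynchronous event stream, and the function name `comb_notch`, the feedback value and the test frequencies are all illustrative assumptions. The filter subtracts the sample from one flicker period ago and feeds back its own delayed output, which places a notch at every harmonic of the flicker frequency (including DC, which this simple form also removes).

```python
import math

def comb_notch(signal, delay, feedback=0.9):
    """Notching comb filter: y[n] = x[n] - x[n - delay] + feedback * y[n - delay].

    `delay` is the flicker period in samples. A feedback value closer to 1
    gives narrower notches but a longer settling time.
    """
    out = []
    for n, x in enumerate(signal):
        x_delayed = signal[n - delay] if n >= delay else 0.0
        y_delayed = out[n - delay] if n >= delay else 0.0
        out.append(x - x_delayed + feedback * y_delayed)
    return out

# Assumed example: 1 kHz sampling and 100 Hz flicker with a second harmonic.
fs, flicker_hz = 1000, 100
delay = fs // flicker_hz  # one flicker period, in samples
t = [n / fs for n in range(1000)]
flicker = [
    math.sin(2 * math.pi * flicker_hz * ti)
    + 0.5 * math.sin(2 * math.pi * 2 * flicker_hz * ti)
    for ti in t
]
# A 30 Hz component standing in for genuine scene change (not a harmonic).
scene = [math.sin(2 * math.pi * 30 * ti) for ti in t]

# Largest remaining flicker amplitude over the final flicker period.
flicker_residual = max(abs(v) for v in comb_notch(flicker, delay)[-delay:])
# Peak amplitude of the scene component once the filter has settled.
scene_peak = max(abs(v) for v in comb_notch(scene, delay)[-100:])
print("flicker residual:", flicker_residual)
print("scene peak:", scene_peak)
```

After the initial transient, the flicker and its harmonic are suppressed to a negligible level, while the 30 Hz component passes with close to unit gain. A single delay line removes the whole harmonic series at once, which is why the approach is cheap enough to run per pixel.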

In terms of engineering contributions, the project has developed a new semi-dense patch-matching feature tracker. This tracker was augmented to work with homography warping in order to improve feature tracking and allow integration with the equivariant filter algorithms. In addition, a 2 kg quadrotor was built to enable experiments that test vision algorithms for flying vehicles, with ground truth obtained from the VICON positioning system.

Feature image photo credit: luxizeng, E+, Getty Images